Managing Backtests

Backtests are an important part of building trust in MMM results. A backtest takes new data, runs it through an older model, and checks how well that older model predicted the new data. Poor backtest performance suggests that your model isn't capturing the true causal relationship between media and your dependent variable.

Recast backtests run automatically when you build a model. For each of four horizons (7, 30, 60, and 90 days), Recast looks for the most recent model deployment whose last modeled day is that many days behind the current last modeled date. For each deployment it finds, it feeds the new spend into that older model to produce a forecast, then compares what actually happened to what the older model predicted would happen.
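The lookup described above can be sketched in a few lines of Python. This is only an illustration, not Recast's implementation: the `Deployment` record, the `pick_backtest_models` function, and the exact-date matching rule are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Horizons named in this article.
HORIZONS_DAYS = (7, 30, 60, 90)


@dataclass(frozen=True)
class Deployment:
    """Hypothetical record of one deployed model."""
    deployed_on: date
    last_modeled_day: date


def pick_backtest_models(deployments, current_last_modeled_day):
    """Map each horizon to the most recently deployed model whose last
    modeled day is exactly that many days back, or None if there is none.

    Exact-date matching is an assumption of this sketch.
    """
    chosen = {}
    for horizon in HORIZONS_DAYS:
        target = current_last_modeled_day - timedelta(days=horizon)
        matches = [d for d in deployments if d.last_modeled_day == target]
        chosen[horizon] = max(matches, key=lambda d: d.deployed_on, default=None)
    return chosen
```

A horizon that maps to `None` corresponds to a backtest that cannot be shown because no model that old exists.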

Backtests can fail to show for a variety of reasons: (a) there are no models that old, (b) the channel set has changed, so the new spend can't be fed into the old model, (c) the contextual variables or spikes have changed, etc. In these cases, Recast won't be able to perform the backtests.

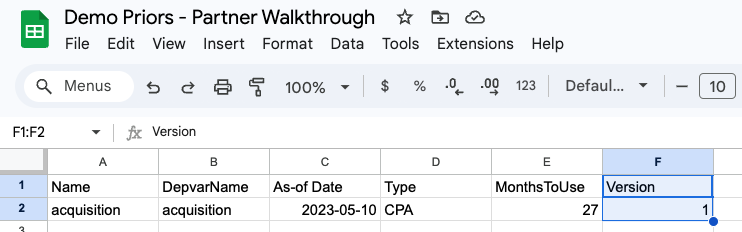
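As a rough illustration of reasons (b) and (c), the check below compares two model configurations and reports what changed. The dictionary layout, the key names (`channels`, `contextual_variables`, `spikes`), and the `backtest_blockers` function are hypothetical and do not reflect Recast's internal format.

```python
def backtest_blockers(old_config, new_config):
    """Return the reasons an old model cannot score the new data.

    Both arguments are plain dicts with hypothetical keys; an empty
    result means nothing in this sketch blocks the backtest.
    """
    reasons = []
    for key in ("channels", "contextual_variables", "spikes"):
        # Order does not matter, only the set of names.
        if set(old_config.get(key, [])) != set(new_config.get(key, [])):
            reasons.append(f"{key} changed")
    return reasons
```

For example, adding a channel to the new configuration makes the function return `["channels changed"]`, which mirrors case (b) above.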

Additionally, there may be times when Recast can perform a backtest but showing it is not desirable. For example, if there was a substantial error in the historical data (e.g. you had previously been modeling $5k/day of Meta spend, but learned it was actually $50k/day), the model may revise dramatically, and backtests against the previous model are not meaningful because that model was misconfigured. In this case, you can force backtests to not show by incrementing the Version number on the ModelInfo tab of the Google Sheets prior sheet. This number defaults to 1. Backtests only show for models with matching Version numbers, so incrementing it hides backtests against models built under the earlier version.
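The effect of the Version number can be pictured as a simple filter over candidate models. The sketch below is an assumption about the behavior described here, not Recast's code; the `version` key and the `eligible_for_backtest` function are invented for the example.

```python
def eligible_for_backtest(candidates, current_version):
    """Keep only older models whose Version matches the current model.

    Each candidate is a dict with a hypothetical "version" key; a missing
    key is treated as 1, the default stated in this article.
    """
    return [c for c in candidates if c.get("version", 1) == current_version]
```

With the current model at Version 2, every candidate still at the default Version 1 is dropped, so no backtests against those models would show.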

In addition to the backtests mentioned above, there is one more backtest that will always show even when the others fail: a 30-day holdout backtest. When Recast estimates your model, it estimates it twice: once using data through the last day of the data, and once using only the data up until 30 days ago. We use this second estimation to make a forecast of the last thirty days. Because both estimations come from the same run, spend, spikes, channel names, and contextual variables are completely consistent between them, so this test is more robust and less prone to errors than the other backtests. Unfortunately, we currently cannot run reconciliation (the plot that compares the model then to the model now) for this type of backtest, so these plots are not available.
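The data split behind this 30-day backtest can be sketched as follows. This is a minimal illustration only: the `split_holdout` function, the `(date, value)` row format, and the choice to include the cutoff day in the training window are assumptions, not a description of how Recast partitions data.

```python
from datetime import timedelta


def split_holdout(daily_rows, holdout_days=30):
    """Split (date, value) rows into a training window and a holdout window.

    The holdout is the final `holdout_days` days of data. Treating the
    cutoff day itself as training data is an assumption of this sketch.
    """
    last_day = max(day for day, _ in daily_rows)
    cutoff = last_day - timedelta(days=holdout_days)
    train = [(day, value) for day, value in daily_rows if day <= cutoff]
    holdout = [(day, value) for day, value in daily_rows if day > cutoff]
    return train, holdout
```

A model fit on `train` would then forecast the dates in `holdout`, and the forecast would be compared with the holdout's actual values.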
